Do you remember how your website looked when you first saw it live? And how much work you put into planning the structure of the website back then?

However good the plan was, over time more and more content is added, new topics are opened up, and the formerly organized website becomes a hodgepodge of content and products. A spring cleaning can help bring order back to your website.

The first step is to capture the existing content (content inventory); the second is to analyze and optimize the information architecture. Together, the two lead to a better website for users and search engines.

Content inventory and keyword mapping: what content is there anyway?

Before the spring cleaning can begin, you first have to get a comprehensive overview of your website.

Very few webmasters have created and maintained a keyword map, that is, an overview of topics and their landing pages. As a result, it is usually not so easy to answer the question of what content you have on your website.

A keyword map can be, for example, a simple table that lists the page topics (for example, information architecture) and maps exactly one address to each.
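To make the "exactly one address per topic" rule concrete, here is a minimal sketch in Python. The topics, the addresses, and the helper name `find_conflicts` are made up for illustration; the idea is only to show how such a table can be checked for topics that point to more than one address.

```python
from collections import defaultdict

# Hypothetical keyword map: each row maps a topic to an address.
keyword_map = [
    ("information architecture", "/information-architecture/"),
    ("content inventory", "/content-inventory/"),
    ("pillar pages", "/pillar-pages/"),
    ("content inventory", "/blog/content-inventory-guide/"),  # conflict
]

def find_conflicts(rows):
    """Return the topics that are mapped to more than one address."""
    addresses_by_topic = defaultdict(set)
    for topic, address in rows:
        # Normalize the topic so "SEO" and "seo " count as the same topic.
        addresses_by_topic[topic.strip().lower()].add(address)
    return {
        topic: sorted(addresses)
        for topic, addresses in addresses_by_topic.items()
        if len(addresses) > 1
    }

conflicts = find_conflicts(keyword_map)
for topic, addresses in conflicts.items():
    print(f"{topic!r} is mapped to {len(addresses)} addresses: {addresses}")
```

Every topic this check reports is a candidate for merging pages or for sharpening the focus of one of them.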

For an example, take a look at the table at the end of this article on topic clusters. But what should you do if you do not currently have such an overview? How can you create one with as little effort as possible?

Create a content inventory using crawlers: page titles & co.

If your content has been well-optimized for search engines in the past, then it's enough to look at the page title, address, and the main heading to capture the topic of a page.

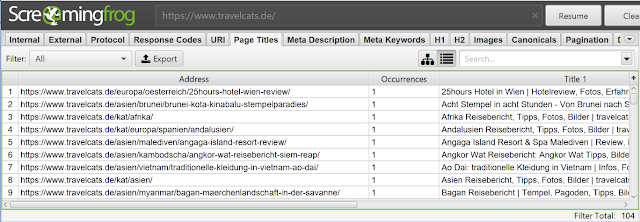

You do not have to gather this data by hand, because crawlers can do that for you. Tools like Screaming Frog (free for up to 500 addresses) or Beam Us Up (free of charge) can help you with this.

Alternatively, you can use crawlers such as Ryte, Sitebulb, Deepcrawl, or Audisto. The list is far from complete! No matter which tool you use, you'll get an overview like this:

The data collected by the crawler can be exported in tabular form for further processing.
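As a rough sketch of that further processing, the following Python snippet reads such an export and keeps only the fields needed for a content inventory. The column names `Address`, `Title 1` and `H1-1` are assumptions and differ from tool to tool, and the sample data is invented; adjust both to your own export.

```python
import csv
import io

# Stand-in for a crawler export. The column names are assumptions;
# change them to whatever your tool actually writes.
EXPORT = """Address,Title 1,H1-1
https://example.com/,Home,Welcome
https://example.com/seo/,SEO Basics,SEO Basics
https://example.com/old/,,
"""

def build_inventory(csv_text):
    """Return one dict per page with its address, title and main heading."""
    inventory = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        title = (row.get("Title 1") or "").strip()
        heading = (row.get("H1-1") or "").strip()
        inventory.append({
            "address": row["Address"],
            "title": title,
            "heading": heading,
            # Pages with neither a title nor a heading need a manual look.
            "needs_review": not (title or heading),
        })
    return inventory

inventory = build_inventory(EXPORT)
for page in inventory:
    print(page)
```

With a real export you would open the file instead of using the inline string; the rest stays the same.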

If you cannot deduce the topic of a page from its page title, main heading, URL, and so on, you can still determine it by reading the article or by analyzing its existing rankings.

A tip: Technically savvy webmasters can also use WDF-IDF tools to determine the most important terms of a page automatically. But again, if the text is not well optimized, these tools will struggle to deliver fitting results.
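If you are curious what such a tool does under the hood, here is a minimal sketch. It assumes one common formulation, WDF = log2(frequency + 1) / log2(document length) and IDF = log10(1 + number of documents / documents containing the term); real tools may use other variants and a far larger reference corpus. The function names and sample texts are made up.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Split a text into lowercase word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def wdf_idf(documents):
    """Return, per document, a dict of term -> WDF * IDF score."""
    token_lists = [tokenize(doc) for doc in documents]
    n_docs = len(token_lists)

    # Count in how many documents each term occurs.
    doc_freq = Counter()
    for tokens in token_lists:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in token_lists:
        counts = Counter(tokens)
        length = max(len(tokens), 2)  # avoid dividing by log2(1) == 0
        doc_scores = {}
        for term, freq in counts.items():
            wdf = math.log2(freq + 1) / math.log2(length)
            idf = math.log10(1 + n_docs / doc_freq[term])
            doc_scores[term] = wdf * idf
        scores.append(doc_scores)
    return scores

pages = [
    "crawl depth and crawl budget for large sites",
    "anchor text for internal links",
    "internal links and site structure",
]
scores = wdf_idf(pages)
top_term = max(scores[0], key=scores[0].get)
print(top_term)
```

Terms that occur often on one page but rarely on the others score highest, which is why a poorly focused text produces poorly focused results.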

Use SEO tools for content inventory

Of course, crawlers are SEO tools as well, but why not resort to tools that tell you which keywords a page currently ranks for?

In addition to paid tools like Sistrix, SEMrush or Searchmetrics, the Google Search Console offers a free alternative.

Within the performance report, you can see which search terms brought visitors to individual addresses.

It's a good idea to use tools like Search Analytics for Sheets, which pull the mapping from search query (keyword) to page directly via the Google Search Console API.
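Once you have such query-to-page data, turning it into a first draft of a keyword map takes only a few lines. The sketch below works on invented rows with the fields `query`, `page` and `clicks`; how your export names these fields is an assumption you will need to check.

```python
from collections import defaultdict

# Hypothetical rows, shaped like query/page data from a Search Console export.
rows = [
    {"query": "content inventory", "page": "/content-inventory/", "clicks": 40},
    {"query": "content audit", "page": "/content-inventory/", "clicks": 12},
    {"query": "pillar pages", "page": "/pillar-pages/", "clicks": 25},
    {"query": "content inventory", "page": "/blog/spring-cleaning/", "clicks": 3},
]

def top_queries_per_page(rows, limit=3):
    """Group rows by page and keep the queries with the most clicks."""
    by_page = defaultdict(list)
    for row in rows:
        by_page[row["page"]].append((row["query"], row["clicks"]))
    return {
        page: sorted(queries, key=lambda item: item[1], reverse=True)[:limit]
        for page, queries in by_page.items()
    }

top = top_queries_per_page(rows)
for page, queries in top.items():
    print(page, queries)
```

In this made-up data, "content inventory" shows up for two different pages, which is exactly the kind of overlap a keyword map is meant to expose.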

However, the method has one drawback: Most of the time, only a fraction of all pages on a website have any significant traffic - via search engines and also in total.

For addresses without significant rankings, the question always arises why this is so. Is it due to on-page issues and therefore missing relevance, for example, because important page elements have been poorly optimized?

Or is it due to duplicate content, or even because keyword research was skipped? Usually, only a trained look at the website and the individual pages helps here.

Structure analysis: How well is a page accessible via links?

You will always be successful in search engines if you offer content that is well adapted to user needs and easily accessible - both within your website and on the web as a whole.

Then relevance (on-page optimization) and popularity (off-page optimization) come together, and your content is well prepared for the scramble for the top positions for sought-after keywords.

If you've found out what content you have through a content inventory, you should ask yourself which of these topics matter to you.

This is followed by the question of whether your website structure also reflects this, because your goal should be to link to important addresses as frequently and as directly as possible.

You should also make sure that the anchor texts summarize the page content in a way users understand and entice them to click.

For example, an anchor text such as "Pillar Pages" describes the content of this linked address better than "here."

Now you may be wondering how to analyze your current information architecture (or site structure) with the least possible effort. The answer: again with crawlers.

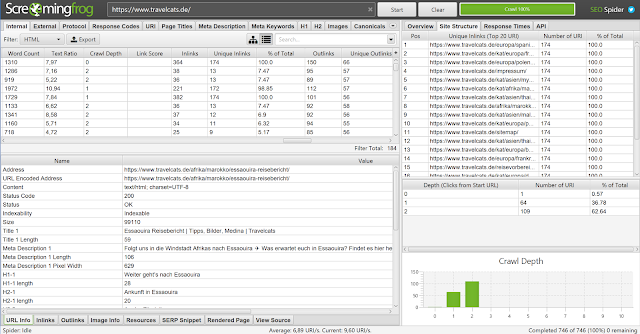

Take Screaming Frog again (yes, one of my favourite SEO tools!).

The tool shows you for each individual address:

- Crawl Depth: How many clicks does it take to reach the address from the start page on the shortest possible path? A page linked directly from the home page is therefore at page level 1.

- Unique Inlinks: How many internal links point to the address if only one link is counted per linking URL (address)?

- Inlinks (number of incoming links): How many links are there in total when multiple links from the same page are counted?

- Unique Outlinks: How many addresses does this page link to if each link destination is counted only once?

- Outlinks: How many outbound links are there on the page as a whole?
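To see how these figures come about, here is a small sketch that computes them for a tiny, invented link graph. It is not how Screaming Frog works internally, just an illustration: crawl depth is found with a breadth-first search from the start page, and the link counts are simple tallies. The function names are made up.

```python
from collections import deque

# Hypothetical internal links as (source, target) pairs. A repeated pair
# stands for several links from the same page to the same target.
links = [
    ("/", "/seo/"),
    ("/", "/seo/"),
    ("/", "/blog/"),
    ("/blog/", "/blog/post-1/"),
    ("/blog/", "/seo/"),
    ("/blog/post-1/", "/"),
]

def crawl_depths(links, start="/"):
    """Breadth-first search: fewest clicks from the start page to each page."""
    graph = {}
    for source, target in links:
        graph.setdefault(source, set()).add(target)
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def link_counts(links):
    """Count total and unique inlinks and outlinks for every page."""
    pages = {page for pair in links for page in pair}
    stats = {
        page: {"inlinks": 0, "unique_inlinks": 0,
               "outlinks": 0, "unique_outlinks": 0}
        for page in pages
    }
    for source, target in links:  # every link counts
        stats[source]["outlinks"] += 1
        stats[target]["inlinks"] += 1
    for source, target in set(links):  # each source/target pair counts once
        stats[source]["unique_outlinks"] += 1
        stats[target]["unique_inlinks"] += 1
    return stats

depths = crawl_depths(links)
stats = link_counts(links)
for page in sorted(depths, key=depths.get):
    print(page, depths[page], stats[page])
```

Pages that never appear in `depths` cannot be reached from the start page at all and deserve a closer look.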

Also, Screaming Frog shows in the right pane under "Site Structure" which addresses are most frequently linked and how many clicks are needed by the shortest route to reach each address.

With the website travelcats.de shown in the screenshot, every document can be reached within a maximum of two clicks - an excellent value.

Here, however, it helps that the website has fewer than 200 pages. For content-rich sites, it is not unusual for some addresses to need a sizable two-digit number of clicks, even on the shortest click path.

Of course, you can also look at the anchor texts with which pages are linked by taking a closer look at their inbound links. For good internal anchor texts, it's best to use phrases that are relevant to the linked page.

So what should you look for? Your main pages should ...

- Be reachable with a few clicks from the start page, so have a low click depth.

- Be linked as often as possible from similar pages, so have many unique inlinks from the most suitable environment.

- Belong to the most frequently linked addresses of the website.

- Be linked with content-describing anchor texts or alt texts (if the link is set on an image).

If you do not yet meet these requirements for individual addresses, the following tips will help you to improve the information architecture of your website.

Better accessibility, assuming the linked page is relevant, often leads to better rankings in the search results. In this context, keep in mind that links tend to be more valuable if the linking page itself is very well linked, both internally and externally.

Are there any pages on your website that are strongly linked internally but that link to only a few addresses on your website themselves?
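One way to find such pages is to filter your crawl data for many unique inlinks combined with few unique outlinks. The following sketch uses invented numbers and arbitrary thresholds (`min_inlinks`, `max_outlinks`); both are assumptions that depend heavily on the size and template of your site.

```python
# Hypothetical per-page figures, e.g. taken from a crawler export.
pages = [
    {"address": "/", "unique_inlinks": 180, "unique_outlinks": 45},
    {"address": "/imprint/", "unique_inlinks": 180, "unique_outlinks": 2},
    {"address": "/blog/post-1/", "unique_inlinks": 4, "unique_outlinks": 12},
]

def find_dead_ends(pages, min_inlinks=100, max_outlinks=5):
    """Return addresses that are heavily linked but link to few pages."""
    return [
        page["address"]
        for page in pages
        if page["unique_inlinks"] >= min_inlinks
        and page["unique_outlinks"] <= max_outlinks
    ]

dead_ends = find_dead_ends(pages)
print(dead_ends)
```

Pages on this list receive a lot of internal link value and could pass some of it on to your important addresses.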

A little tip on the side: Many crawlers let you query data from other SEO tools directly while crawling. For example, Screaming Frog can pull in Google Search Console data.

Four methods to optimize the website structure

Make important pages directly accessible from the start page

Usually, the start page is the page that is most often linked, both internally and externally. Accordingly, this address is a very strong hub on which many visitors regularly land.

Use the power of this page to link your important addresses directly. However, be quite stingy when selecting the addresses to be linked.

The principle "less is more" applies here: the more pages are linked, the less authority each individual link passes on.
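A quick back-of-the-envelope calculation shows the effect. The sketch assumes the simplified model known from the original PageRank idea, in which a page splits the authority it passes on evenly among its outgoing links; the damping value of 0.85 and the function name are assumptions, and real search engines weigh links in far more complex ways.

```python
def authority_per_link(page_authority, outlink_count, damping=0.85):
    """Share of a page's authority that a single outgoing link passes on.

    Simplified model: the passed-on authority is split evenly among all links.
    """
    if outlink_count <= 0:
        raise ValueError("outlink_count must be positive")
    return damping * page_authority / outlink_count

# Compare a start page that links to 10, 50 or 200 addresses.
for count in (10, 50, 200):
    share = authority_per_link(1.0, count)
    print(f"{count} links: {share:.4f} per link")
```

In this model, going from 10 to 200 linked addresses cuts the share per link to a twentieth, which is why it pays to be picky.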

Use the main navigation or the footer to link important addresses

An easy way to generate more internal links for a page is to use the elements that are identical on many or even all pages.

Mostly these are the main navigation and the footer area. Both are important for structure optimization and are useful SEO tools.

Rethink the sorting on overview pages

Blogs in particular tend to sort content by date only and to display new posts before older ones. What makes sense for websites with daily updates, such as newspapers, has a decisive disadvantage from an SEO point of view: with each new article, older articles become less accessible internally and, as a result, often decline in the search engine rankings.

Because why should Google & Co. think that content is relevant if it is difficult to access within the website? And why should an older article be less important overall?

It's best to reserve a space on your website that makes your evergreen articles easily accessible. How about, for example, an area with links to the most frequently read articles?

Use additional categories to distribute content better

The structure of your website can benefit if you create relevant subcategories instead of grouping all content under a single top category.

These finer categories may also help you to cover new relevant keywords! If, for example, you first filed a lot of content under "SEO" but then notice that you also have many articles on "on-page SEO," then this could be a useful subcategory.

Conclusion: Do not leave your SEO ranking to chance

With the tools presented in hand, it should be easier for you to land higher up in organic search results.

Always make sure that your most important pages are not just well optimized on-page, but also easy for your visitors to find within your site.

If you want to go even further, you can follow a content inventory with a content audit, in which the contents are examined in more detail.

Which content would need to be updated? Which pages are too similar and should be merged into one article? Which contents still have gaps that should be closed?

Where do competitors offer much more information and possibly rank higher in the search results because of it? Or are there even articles that can be deleted entirely from the website?

Dare to remove content that is no longer relevant: the higher the share of quality content among all the content on your site, the more relevant your site is to users and search engines.
